43 learning with less labels

Learning with Less Labels in Digital Pathology via Scribble Supervision from Natural Images. Wern Teh, Eu; Taylor, Graham W. A critical challenge of training deep learning models in the Digital Pathology (DP) domain is the high annotation cost by medical experts.

[2201.02627] Learning with Less Labels in Digital Pathology via ... One potential weakness of relying on class labels is the lack of spatial information, which can be obtained from spatial labels such as full pixel-wise segmentation labels and scribble labels. We demonstrate that scribble labels from NI domain can boost the performance of DP models on two cancer classification datasets (Patch Camelyon Breast Cancer and Colorectal Cancer dataset).

Learning with less labels

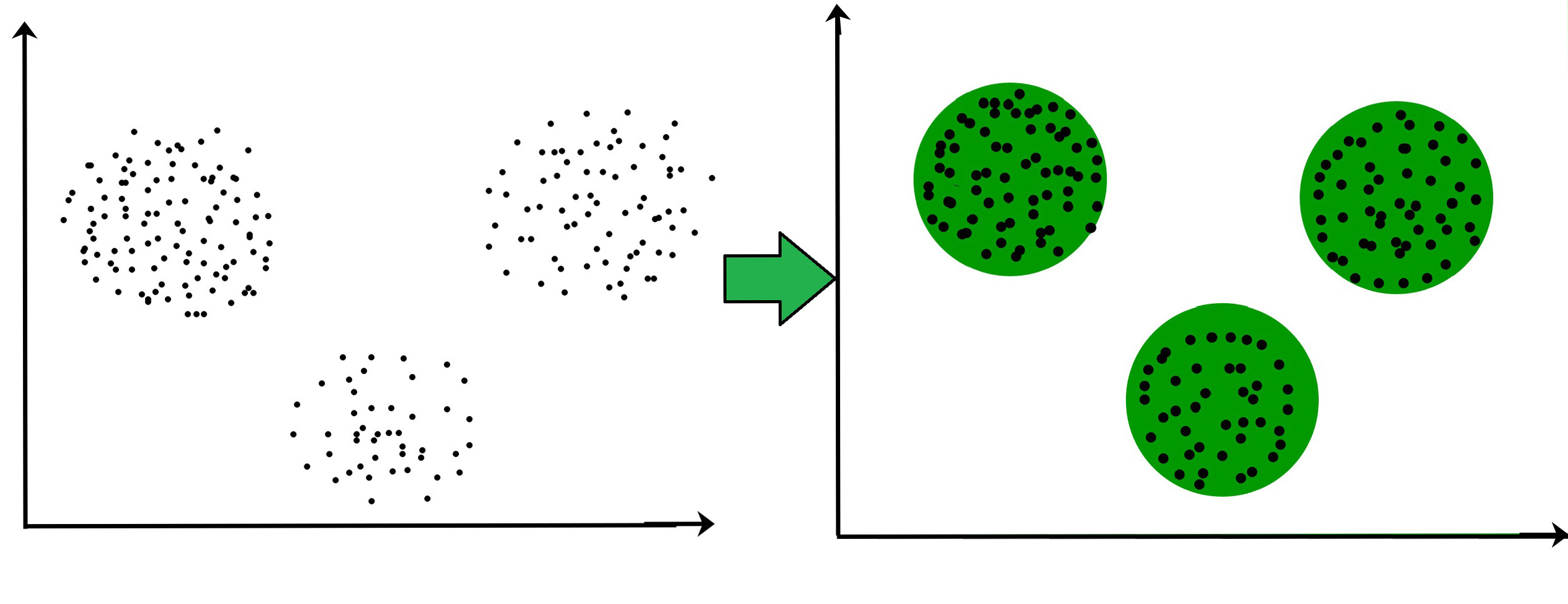

Figure 4 from Learning with Less Data Via Weakly Labeled Patch ... Fig. 4. A visualization of how ProxyNCA works. [Left panel] Standard NCA compares one example with respect to all other examples (8 different pairings). [Right panel] In ProxyNCA, we only compare to the class proxies (2 different pairings). - "Learning with Less Data Via Weakly Labeled Patch Classification in Digital Pathology"

Less is More: Labeled data just isn't as important anymore. Supervised learning requires a lot of labeled data that most of us just don't have. Semi-supervised learning (SSL) is a different method that combines unsupervised and supervised learning to take advantage of the strengths of each. Here's one possible procedure (called SSL with "domain-relevance data filtering"): 1.

Learning with less labels in Digital Pathology via Scribble Supervision ... Download Citation | Learning with less labels in Digital Pathology via Scribble Supervision from natural images | A critical challenge of training deep learning models in the Digital Pathology (DP ...
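As a rough illustration of the Fig. 4 caption above, the sketch below counts the comparisons made for one anchor example and computes a proxy-based loss. It is a minimal sketch, not the paper's implementation: the function names, the toy 2-D embeddings, and the choice of a softmax over negative squared distances to all class proxies are assumptions made for illustration.

```python
import math


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nca_comparisons(num_examples):
    """Standard NCA: one anchor is paired with every other example."""
    return num_examples - 1


def proxy_comparisons(num_classes):
    """Proxy-based variant: one anchor is paired with one proxy per class."""
    return num_classes


def proxy_softmax_loss(embedding, proxies, label):
    """Negative log-probability of the correct proxy (assumed formulation).

    Logits are negative squared distances to each class proxy, so the
    loss is small when the embedding sits close to its own class proxy.
    """
    logits = [-squared_distance(embedding, p) for p in proxies]
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[label]


# Toy setup mirroring the caption: 9 examples, 2 classes.
print(nca_comparisons(9), proxy_comparisons(2))

proxies = [[0.0, 0.0], [3.0, 4.0]]  # hypothetical class proxies
print(proxy_softmax_loss([0.0, 0.0], proxies, 0))  # near its own proxy
print(proxy_softmax_loss([0.0, 0.0], proxies, 1))  # far from its proxy
```

The point of the design is that the number of comparisons grows with the number of classes rather than with the number of training examples.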

Domain Adaptation and Representation Transfer and Medical ... - Springer Domain Adaptation and Representation Transfer and Medical Image Learning with Less Labels and Imperfect Data: First MICCAI Workshop, DART 2019, and First International Workshop, MIL3ID 2019, Shenzhen, Held in Conjunction with MICCAI 2019, Shenzhen, China, October 13 and 17, 2019, Proceedings. Editors: Qian Wang, Fausto Milletari, Hien V. Nguyen,

Learning image features with fewer labels using a semi-supervised deep ... Learning feature embeddings for pattern recognition is a relevant task for many applications. Deep learning methods such as convolutional neural networks can be employed for this assignment with different training strategies: leveraging pre-trained models as baselines; training from scratch with the target dataset; or fine-tuning from the pre-trained model.

What Is Data Labeling in Machine Learning? In machine learning, a label is added by human annotators to explain a piece of data to the computer. This process is known as data annotation and is necessary to show the human understanding of the real world to the machines. Data labeling tools and providers of annotation services are an integral part of a modern AI project.

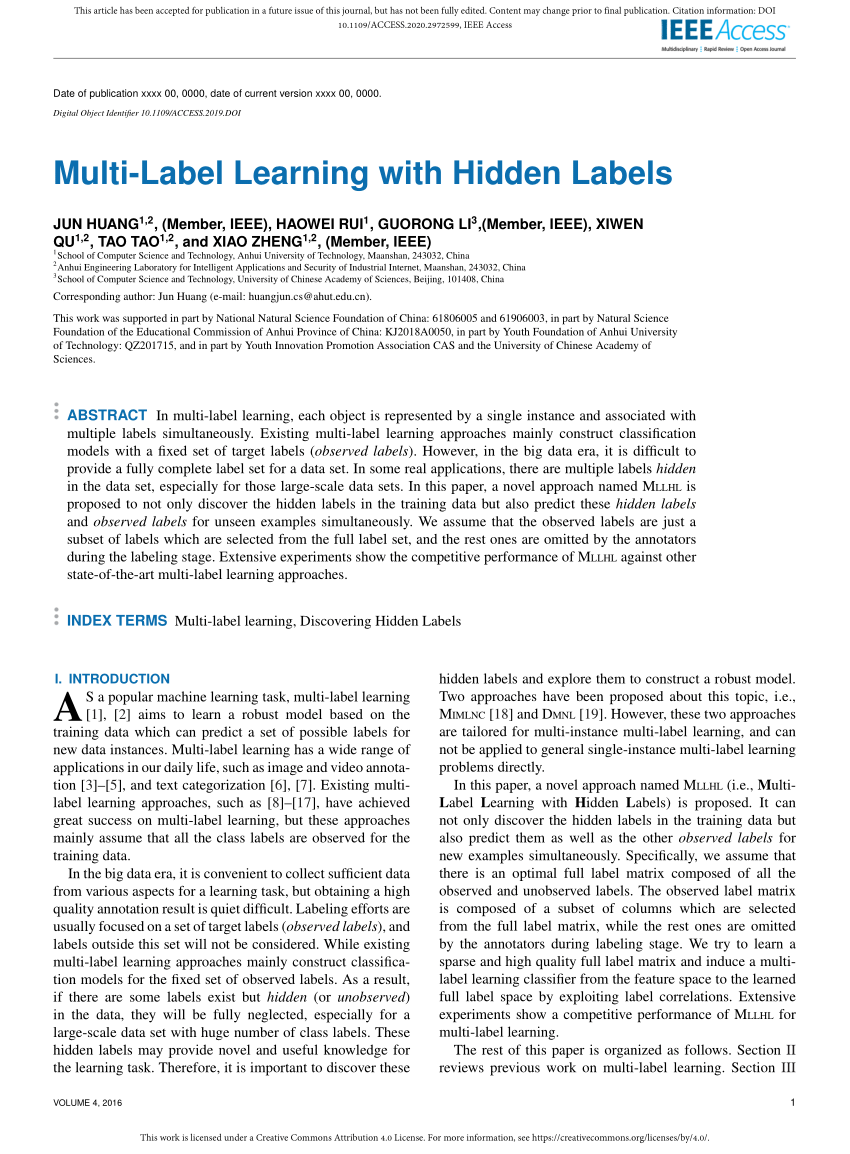

Figure 5 from Learning with Less Data Via Weakly Labeled Patch ... Fig. 5. A visualization of top-3 retrieval performance on the CRC dataset with features trained on the weakly labeled dataset. Correctly retrieved images (same class as query image) are highlighted with a green box. Query images are selected randomly from the dataset. - "Learning with Less Data Via Weakly Labeled Patch Classification in Digital Pathology"

Learning With Auxiliary Less-Noisy Labels - Baidu Scholar. Obtaining a sufficient number of accurate labels to form a training set for learning a classifier can be difficult due to the limited access to reliable label resources. Instead, in real-world applications, less-accurate labels, such as labels from nonexpert labelers, are often used. However, learning ...

Learning with Limited Labeled Data, ICLR 2019. Increasingly popular approaches for addressing this labeled data scarcity include using weak supervision (higher-level approaches to labeling training data that are cheaper and/or more efficient, such as distant or heuristic supervision, constraints, or noisy labels); multi-task learning, to effectively pool limited supervision signal; data augmentation strategies to express class invariances; and introduction of other forms of structured prior knowledge.

Learning with Less Labeling (LwLL) - darpa.mil The Learning with Less Labeling (LwLL) program aims to make the process of training machine learning models more efficient by reducing the amount of labeled data required to build a model by six or more orders of magnitude, and by reducing the amount of data needed to adapt models to new environments to tens to hundreds of labeled examples.

Less Labels, More Learning | AI News & Insights. Machine Learning Research. Published Mar 11, 2020. In small data settings where labels are scarce, semi-supervised learning can train models by using a small number of labeled examples and a larger set of unlabeled examples. A new method outperforms earlier techniques.

Machine learning with less than one example - TechTalks A new technique dubbed "less-than-one-shot learning" (or LO-shot learning), recently developed by AI scientists at the University of Waterloo, takes one-shot learning to the next level. The idea behind LO-shot learning is that to train a machine learning model to detect M classes, you need less than one sample per class.

Pro Tips: How to deal with Class Imbalance and Missing Labels. Any of these classifiers can be used to train the malware classification model. Class Imbalance. As the name implies, class imbalance is a classification challenge in which the proportion of data from each class is not equal. The degree of imbalance can be minor, for example, 4:1, or extreme, like 1000000:1.

Learning With Auxiliary Less-Noisy Labels - IEEE Xplore Although several learning methods (e.g., noise-tolerant classifiers) have been advanced to increase classification performance in the presence of label noise, only a few of them take the noise rate into account and utilize both noisy but easily accessible labels and less-noisy labels, a small amount of which can be obtained with an acceptable added time cost and expense.
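One common way to handle an imbalance such as the 4:1 ratio mentioned in the "Pro Tips" entry above is to weight each class inversely to its frequency. The sketch below is an illustration under stated assumptions, not the linked article's method: the helper name `balanced_class_weights`, the heuristic `n_samples / (n_classes * count)`, and the toy label names are all hypothetical choices.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Return a weight per class that is inversely proportional to its count.

    Uses the heuristic n_samples / (n_classes * class_count), so a class
    that is four times rarer receives a weight four times larger.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * k) for cls, k in counts.items()}


# Toy dataset with a 4:1 imbalance (hypothetical label names).
labels = ["benign"] * 80 + ["malware"] * 20
weights = balanced_class_weights(labels)
print(weights)
```

Weights like these are typically passed to a loss function or classifier so that errors on the rare class count for more during training.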

Learning with Less Labels in Digital Pathology Via Scribble Supervision ... A critical challenge of training deep learning models in the Digital Pathology (DP) domain is the high annotation cost by medical experts. One way to tackle this issue is via transfer learning from the natural image domain (NI), where the annotation cost is considerably cheaper. Cross-domain transfer learning from NI to DP is shown to be successful via class labels [1]. One potential weakness ...

Darpa Learning With Less Label Explained - Topio Networks The DARPA Learning with Less Labels (LwLL) program aims to make the process of training machine learning models more efficient by reducing the amount of labeled data needed to build the model or adapt it to new environments. In the context of this program, we are contributing Probabilistic Model Components to support LwLL.

Learning With Less Labels (lwll) - mifasr - Weebly DARPA Learning with Less Labels (LwLL) HR0 Abstract Due: August 21, 2018, 12:00 noon (ET). Proposal Due: October 2, 2018, 12:00 noon (ET). Proposers are highly encouraged to submit an abstract in advance of a proposal to minimize effort and reduce the potential expense of preparing an out of scope proposal. Grants.gov FedBizOpps DARPA is soliciting innovative research proposals in the area of machine ...

PDF Model Broad Agency Announcement (BAA) Learning with Less Labels program (LwLL) will divide the effort into two technical areas (TAs). TA1 will focus on the research and development of learning algorithms that learn and adapt efficiently; and TA2 will formally characterize machine learning problems and prove the limits of learning and adaptation. TA1: Learn and Adapt Efficiently

Learning with Less Labels Imperfect Data | Hien Van Nguyen 1st Workshop on Medical Image Learning with Less Labels and Imperfect Data 1) Self-supervised learning of inverse problem solvers in medical imaging [ slides] 2) Weakly Supervised Segmentation of Vertebral Bodies with Iterative Slice-propagation [ slides] 3) A Cascade Attention Network for Liver ...

Learning with Less Labels (LwLL) | Research Funding In order to achieve the massive reductions of labeled data needed to train accurate models, the Learning with Less Labels program (LwLL) will divide the effort into two technical areas (TAs). TA1 will focus on the research and development of learning algorithms that learn and adapt efficiently; and TA2 will formally characterize machine learning problems and prove the limits of learning and adaptation.

Image Classification and Detection - PLAI - Programming Languages for ... The DARPA Learning with Less Labels (LwLL) program aims to make the process of training machine learning models more efficient by reducing the amount of labeled data needed to build the model or adapt it to new environments. In the context of this program, we are contributing Probabilistic Model Components to support LwLL.

The Positives and Negatives Effects of Labeling Students "Learning ... The "learning disabled" label can result in the student and educators reducing their expectations and goals for what can be achieved in the classroom. In addition to lower expectations, the student may develop low self-esteem and experience issues with peers. Low Self-Esteem. Labeling students can create a sense of learned helplessness.

LwFLCV: Learning with Fewer Labels in Computer Vision This special issue focuses on learning with fewer labels for computer vision tasks such as image classification, object detection, semantic segmentation, instance segmentation, and many others and the topics of interest include (but are not limited to) the following areas: • Self-supervised learning methods • New methods for few-/zero-shot learning
